Wait But Why introduces the concept of being a multi-agent system in a nice accessible way. You can see it being introduced in the second panel.

I’ll gloss over one of the important oversimplifications that it makes for the sake of narrative convenience. There are clearly not two separate creatures within your brain each with their own brain as is depicted. This can cause confusion in other contexts – such as asking which part of my brain is really “me”. But it won’t come up in this post.

The other distortion is viewing the antagonist as a monkey. This conjures up the image of a creature that’s super annoying and capable of some basic strategizing but still fundamentally dumber than you are.

I don’t think my monkey is dumb. I don’t think my monkey is dumb at all.

Other models that I’ve seen present the antagonist as more of a Mr. Hyde: super smart, willing and able to use all kinds of underhanded tactics that wouldn’t even occur to you, and with some apparently bizarre desires and motives.

With a monkey, you can empirically observe how he behaves in different situations and then try and plan around him accordingly. If he responds to the panic monster in the way shown in Wait But Why, then you can try introducing more panic into your life if you feel you have the stamina to cope with that. If he responds to other incentives as well then you can explore those.

I feel like my monkey would put the kibosh on me trying to threaten him with the panic monster.

Take Beeminder.

It can be viewed as a game between the official personality – let’s call him Dr. Mockito – and the Mr. Hyde character. In the case of Beeminder the game is not a trade – it has more of the structure of blackmail.

What is blackmail in the context of game theory?

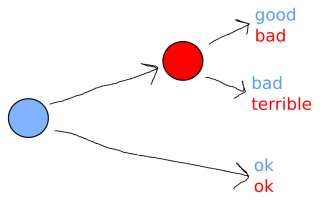

It looks something like this picture. The circles represent decision points for each player; the coloured outcomes at the end give an indication of the utility received by each player if the game goes that way.

In this case the first player, blue, gets to choose between a neutral outcome shown on the lower path and the “blackmail” setup shown on the upper. The blackmail situation makes things worse for red – red can now only choose between bad and terrible, the “ok” option is not available.

Blue is hoping that when put in this situation, red is going to choose the better of the two evils. Along this top path blue receives “good” – the best possible outcome for blue. Red, however, has the option at this point to make things bad for everyone – red receives the “terrible” outcome so definitely loses, but blue also receives “bad” and hence regrets engaging in the blackmail. Creating a blackmail situation is always somewhat costly for the initiator.

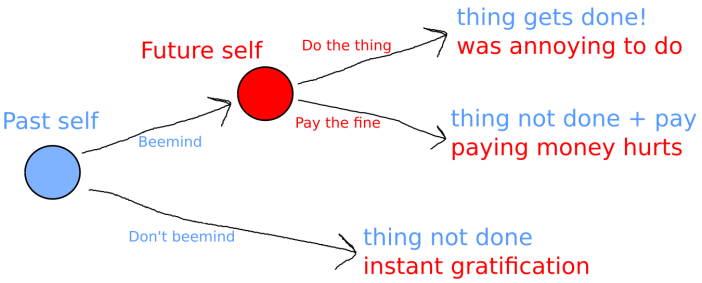

Beeminder has the same structure. I’ll draw the same picture again with different labels to make this clearer.

The “past self” and “future self” are of course named relative to each other. Past self is the one who decided to Beemind in the first place, and corresponds to Dr. Mockito. Future self is the one who has to do the actual work and corresponds to Mr. Hyde.

Note that it’s the “good guy” here who’s committing “blackmail”. I’ll stay neutral on the interpretation of that, for now, and just note that this is what I meant and I didn’t label things the wrong way around or something.

Now.

There’s this thing called Causal Decision Theory (CDT) and it’s pretty much the gold standard amongst boring people as to how decisions should get made. It says that when you get a game tree like this one, you view each of the little branches as a subgame, solve that one first, and then work backwards.

In the case of the blackmail game, the subgame with the red node at the head is easy to solve. Red chooses “outcome that’s merely bad for me and good for blue”, which would be “do the thing” in the Beeminder game. (It’s explicitly part of Beeminder that the fine is supposed to be set at a point where it’s more scary or painful than actually doing the work would be.)

And so given that the outcome of this subgame would be “bad for red and good for blue”, it’s CDT-rational for blue to choose the blackmail option, i.e. to start Beeminding.
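As a rough sketch, the backward induction described above can be written out in a few lines of Python. The numbers are made up for illustration: the post only gives the ordering of the outcomes (good, neutral, bad for blue; ok, bad, terrible for red), so the specific values and the names `PAYOFFS`, `red_best_reply` and `blue_best_move` are assumptions.

```python
# Hypothetical payoffs as (blue, red). Only the ordering comes from the post;
# the actual numbers are invented for illustration.
PAYOFFS = {
    ("no_blackmail", None): (0, 0),     # neutral for blue, "ok" for red
    ("blackmail", "comply"): (2, -1),   # good for blue, bad for red
    ("blackmail", "refuse"): (-1, -3),  # bad for blue, terrible for red
}


def red_best_reply():
    """Solve the subgame first: red picks whichever reply hurts red least."""
    return max(("comply", "refuse"), key=lambda reply: PAYOFFS[("blackmail", reply)][1])


def blue_best_move():
    """Work backwards: blue assumes red will play the subgame solution."""
    reply = red_best_reply()
    blackmail_value = PAYOFFS[("blackmail", reply)][0]
    neutral_value = PAYOFFS[("no_blackmail", None)][0]
    return "blackmail" if blackmail_value > neutral_value else "no_blackmail"


print(red_best_reply(), blue_best_move())
```

With these assumed numbers red complies and blue blackmails, which is the outcome the one-shot analysis predicts.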

The main competitor to CDT is not that thing called “Evidential Decision Theory”, that’s just a pile of crap and you can ignore it. The competitor instead is all that cool stuff coming out of MIRI which I hope to be studying fairly seriously soon.

Those kinds of decision theory often concern themselves with setups where agents can peer into each other and in particular figure out what decisions each other would make in different circumstances. To some extent this is true in a CDT analysis as well – the agents can see each other’s utility functions after all, which is not a physical feature of the world but of the other agent’s motivations. The CDT analysis goes on to assume that as soon as you call something an “agent” with a “utility function” it must adhere to CDT and then everything follows from that. The “timeless”-style decision theories from MIRI do not make that kind of assumption.

In those kinds of analysis, if red had the ability to credibly signal that he was going to choose the “terrible” option when faced with blackmail, then blue would see this and so the blackmail would never actually happen. Red would end up winning. It’s not quite that simple – if blue somehow had the ability to commit to blackmailing before red could commit to not being taken in by it, then red wouldn’t win. So the actual outcome between genuinely intelligent agents might depend very subtly on what exactly the two agents are allowed to signal to each other, and when, and how.

Let’s just say that such a precommitment by red wouldn’t obviously be irrational, but is inherently somewhat risky.
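To make the precommitment point concrete, here is a minimal sketch in which blue can see red's policy before moving. It reuses the same invented payoffs as an assumption (only their ordering comes from the post), and `blue_reply_to_policy` is a hypothetical helper, not anything from the decision theory literature.

```python
# Hypothetical payoffs as (blue, red); only the ordering comes from the post.
PAYOFFS = {
    ("no_blackmail", None): (0, 0),     # neutral for blue, "ok" for red
    ("blackmail", "comply"): (2, -1),   # good for blue, bad for red
    ("blackmail", "refuse"): (-1, -3),  # bad for blue, terrible for red
}


def blue_reply_to_policy(red_policy):
    """Blue's best move when red's reply to blackmail is credibly known in advance."""
    blackmail_value = PAYOFFS[("blackmail", red_policy)][0]
    neutral_value = PAYOFFS[("no_blackmail", None)][0]
    return "blackmail" if blackmail_value > neutral_value else "no_blackmail"


def red_payoff(blue_move, red_policy):
    """Red's utility once both choices are fixed."""
    key = (blue_move, red_policy) if blue_move == "blackmail" else ("no_blackmail", None)
    return PAYOFFS[key][1]


for policy in ("comply", "refuse"):
    move = blue_reply_to_policy(policy)
    print(policy, move, red_payoff(move, policy))

# The risky case: blue has already committed to blackmail before seeing red's policy.
print(red_payoff("blackmail", "refuse"))
```

Under these assumptions a red that is known to refuse never gets blackmailed and ends up with “ok”, while the last line shows the risk: if blue is already committed, the same refusal policy lands red on “terrible”.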

This is somewhat beside the point though.

The Beeminder game is not blackmail. It’s iterated blackmail.

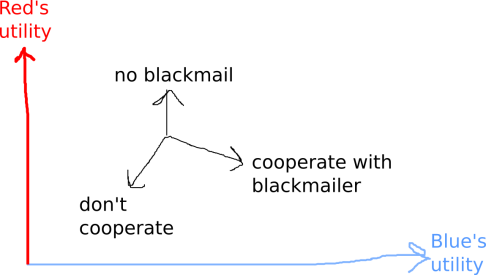

This is intended to show the three possible outcomes of a very non-extreme version of blackmail – basically “please do me a small favour and I won’t go out of my way to do something annoying”.

A particular feature of note is that according to red, not cooperating with the blackmailer doesn’t end up that much worse than cooperating. In the one-shot causal decision theory version, this would still cause red to cooperate – that outcome is still better after all. But in the iterated version, just as with prisoner’s dilemma, the CDT worldview is a lot less compelling.

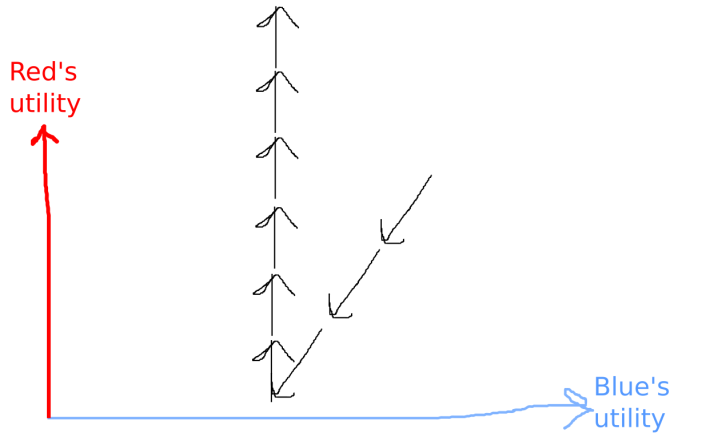

In this example iterated play, blue starts off all aggressive with the blackmailing. Red is having nothing of it and just refuses to cooperate, losing a ton of utility initially. But blue gets fed up of spending all his own utility on blackmail that doesn’t seem to be working (remember in the real world blue would have imperfect information about red’s utility function so a bluffing strategy by red might be particularly effective). Blue gives up and doesn’t blackmail red for the entire rest of the game. Red ends up doing well – certainly better than if blue had continued blackmailing him and he’d continued to cooperate. Blue fails to get any of the utility that he would have got from a successful blackmail but still does better than if he’d added another failed blackmail to the chain (and worse than if he’d never done any blackmailing in the first place). In this game it’s not even clear who’s blackmailing who any more.
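A toy simulation of that kind of iterated play is sketched below. Everything numeric is an assumption: the per-round payoffs are invented so that refusing is only a little worse for red than complying, and blue is modelled very crudely as giving up for good after a fixed number of failed attempts (`blue_patience`, a hypothetical parameter).

```python
# Hypothetical per-round payoffs as (blue, red) for the mild version of the game.
ROUND_PAYOFFS = {
    "none": (0, 0),      # blue doesn't blackmail this round
    "comply": (1, -2),   # blackmail works
    "refuse": (-1, -3),  # blackmail fails and costs both players
}


def play(rounds, red_refuses, blue_patience):
    """Return (blue_total, red_total) when blue quits after blue_patience refusals."""
    blue_total = red_total = 0
    refusals = 0
    for _ in range(rounds):
        if refusals >= blue_patience:
            outcome = "none"  # blue has given up blackmailing for good
        elif red_refuses:
            outcome = "refuse"
            refusals += 1
        else:
            outcome = "comply"
        blue_gain, red_gain = ROUND_PAYOFFS[outcome]
        blue_total += blue_gain
        red_total += red_gain
    return blue_total, red_total


print(play(10, red_refuses=True, blue_patience=3))   # (-3, -9)
print(play(10, red_refuses=False, blue_patience=3))  # (10, -20)
```

With these made-up numbers, a stubborn red finishes on -9 rather than the -20 he would get from complying every round, and blue finishes on -3: worse than never blackmailing at all, better than one more failed attempt.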

This I think is something like what happened with me and Beeminder. And this feels like something of an insight.

I haven’t talked about this to Daniel yet, but in my previous discussion he brought up the idea that giving up and paying the fine in Beeminder was irrational, and I didn’t really question that. This analysis shows that this is really only true if you define rationality in the very narrow sense of causal decision theory (which produces some very pathological outcomes in iterated games such as this or iterated prisoner’s dilemma).

In my model Mr. Hyde is not irrational. He has some strange motives – he’d rather lounge around than exercise or complete some small todo that’s written on a post-it. He’d rather not pay the Beeminder fine but it’s not so terribly bad that he can’t play some game theory around it. And it worked – I gave up Beeminder, continuing to lounge around not exercising or being a slave to my todo list.

Don’t underestimate the monkey. He’s a cunning one.

Great post! I like your game-theoretic definition of blackmail. In the context of Beeminder, let me quote myself from http://forum.beeminder.com/t/rebuttal-to-sinceriouslys-self-blackmail/2784 :

> If there were literally 2 distinct agents in a noncooperative game then I think you’d be right. Don’t cave to the blackmail, pay the penalty. Because then your adversary loses the incentive to continue to blackmail you. So my objection is that the agents aren’t distinct or adversarial. For the most part I just do what Beeminder tells me to when it tells me to do it and I feel great about that, even in the moment!

Of course, that’s what works for me, and your point is that it didn’t work for you. I’m now wondering if Nick Winter’s idea of success spirals — http://blog.beeminder.com/nick — could work better for you.

Yeah, I’m not sure my little game tree of blackmail is completely what it’s about. I feel like there was some other scenario that has the same payoff structure and didn’t feel blackmaily at all, but I don’t remember what it is right now. Just be warned about that before you go using it to impress all your friends.

It seems like the extent to which our minds can be modeled as multiple agents that act antagonistically is something that people differ on, and maybe the less cooperative ones do worse at Beeminder. That’s all pretty abstract though and I don’t know straight off how to turn it into something falsifiable or actionable.

The Nick Winter post contains a remark dripping with so much wisdom it clearly has implications way beyond Beeminder.

“See, the real cost of failing a goal is not the loss of your Beeminder pledge money. It’s the loss of confidence that you will meet all future goals that you perceive as similar to the current goal.”

^^ I feel like something like that is where I tend to go wrong each time, whether it’s with Beeminder or anything else that requires maintaining a particular attitude. And it’s not like I can’t see it happening ahead of time either.

That makes a ton of sense about how people differ in how antagonistic their different selves are. I think it requires hardly any antagonism to yield egregious akrasia. Like where you endlessly procrastinate but small changes in your environment suffice to get you on board with your long-term goals. I think Beeminder handles the case of a fair bit of antagonism between selves but sufficient antagonism will thwart even Beeminder. I think Beeminder plus social support (like friends keeping an eye on your graphs, and Beeminder’s supporters feature) can handle even more instra-self antagonism.

And, yeah, Nick Winter is pretty brilliant. 🙂 I’m so impressed with him I invested in his latest startup (CodeCombat).

Oh, and automatic data sources are maybe another step up in handling more intra-self antagonism.

ps, i see i had a typo in my previous use of that phrase.

Can you explain that? About how automatic data sources help?

I guess I was thinking more of countering weaseling, which you, impressively, have never had a problem with. See http://blog.beeminder.com/cheating

And maybe having an automatic data source also could mitigate intra-self antagonism by keeping the focus away from the commitment contract. Like maybe you could train up the habit of keeping engaged with the activity enough to keep Beeminder completely quiet — so for the most part you could forget Beeminder was even minding you at all.
